Keeping knowledge current isn’t just housekeeping—it’s the engine behind faster decisions, fewer repeated questions, and compounding organizational learning. A well-designed content lifecycle for knowledge management turns “we wrote it once” into “we keep it useful.” Below is a pragmatic, expert approach to designing and running a content lifecycle that keeps knowledge fresh, findable, and trusted—without drowning teams in bureaucracy.

Why a Content Lifecycle Matters

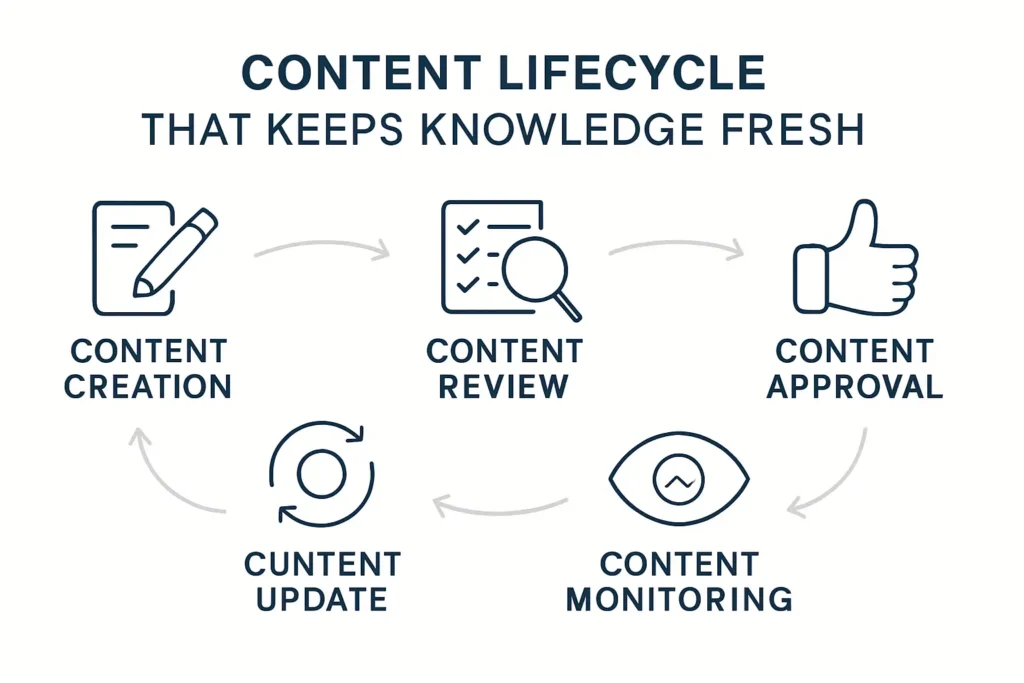

A content lifecycle is the end-to-end process for how knowledge is created, structured, reviewed, updated, distributed, and eventually retired. Done well, it:

- Reduces time-to-answer and duplicate effort

- Increases trust in the knowledge base by keeping content aligned with how things actually work

- Reveals gaps and obsolete material before users encounter them

- Turns frontline questions into reusable, high-signal assets

- Supports compliance and risk management with clear ownership and auditability

Think of it as knowledge operations: a predictable rhythm for keeping content living, not static.

Principles for Fresh, Reliable Knowledge

- Continuous over episodic: Small, frequent updates beat big bang rewrites.

- Ownership is explicit: Every page has a named owner and a backup.

- Evidence-driven: Search queries, tickets, and analytics drive the backlog.

- Light, not lax: Minimal viable governance prevents chaos without slowing teams.

- User-centered: Titles, language, and structure match how people actually search and work.

- Measurable: Health metrics, not vanity counters, guide action.

The Content Lifecycle Stages

1) Intake and Prioritization

Purpose: Capture requests and ideas, then prioritize based on impact.

What to do:

- Intake channels: central form, chatbot escalation, editorial Slack/Teams channel.

- Sources: top search queries with a low click-through rate (CTR), zero-result queries, repeated tickets, SME insights, product changes, policy updates.

- Scoring criteria: frequency × severity × audience size × risk. Factor in “effort to fix” to surface quick wins.

Output: A triaged backlog with clear priorities—“fix now,” “schedule,” “park.”
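
As a rough illustration of the scoring criteria above, the sketch below multiplies four 1-to-5 ratings and uses effort to promote quick wins. The rating scale, the `Request` fields, and the thresholds (200 and 50) are assumptions chosen for the example, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Request:
    title: str
    frequency: int  # 1 (rare) to 5 (constant); assumed scale
    severity: int   # 1 (annoying) to 5 (blocking)
    audience: int   # 1 (one team) to 5 (everyone)
    risk: int       # 1 (none) to 5 (legal or security exposure)
    effort: int     # 1 (trivial) to 5 (large rewrite)


def impact(request: Request) -> int:
    """Multiply the four impact factors; ranges from 1 to 625."""
    return request.frequency * request.severity * request.audience * request.risk


def triage(request: Request, fix_now: int = 200, schedule: int = 50) -> str:
    """Sort a request into one of the three backlog buckets."""
    score = impact(request)
    # Quick win: moderate impact but cheap to fix, so do it immediately.
    quick_win = score >= schedule and request.effort <= 2
    if score >= fix_now or quick_win:
        return "fix now"
    if score >= schedule:
        return "schedule"
    return "park"


if __name__ == "__main__":
    item = Request("Password reset steps are outdated", 5, 4, 5, 3, effort=3)
    print(item.title, "->", triage(item))
```

Tune the thresholds against a few weeks of real intake so that the “fix now” bucket stays small enough to actually clear.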

2) Authoring and Structuring

Purpose: Create content that is accurate, reusable, and easy to maintain.

What to do:

- Use templates: purpose, scope, prerequisites, step-by-step, variations, known issues, last verified date.

- Component content: store reusable snippets (definitions, warnings, legal statements, system limits) in one place and transclude them.

- Controlled vocabulary: consistent entity names, product terms, and synonyms.

- Writing standards: short sentences, active voice, task-oriented headings, user-intent alignment.

- Accessibility: scannable headings, lists for tasks, alt text for images, code/CLI blocks for technical steps.

Output: Draft content that’s structurally sound and consistent across authors.
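
To make the transclusion idea concrete, here is a minimal sketch of resolving snippet placeholders at render time. The `{{snippet:name}}` syntax, the snippet keys, and the sample text are all made up for illustration; most wikis and CMS platforms ship their own include mechanism, which should be preferred where available.

```python
import re

# Hypothetical snippet library: one source of truth per reusable fragment.
SNIPPETS = {
    "data-warning": "Warning: never paste customer data into public channels.",
    "support-hours": "Support is staffed on weekdays.",
}

# Assumed placeholder syntax: {{snippet:some-key}}
PLACEHOLDER = re.compile(r"\{\{snippet:([a-z0-9-]+)\}\}")


def render(body: str, snippets: dict) -> str:
    """Replace every placeholder in body with the current snippet text."""
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in snippets:
            # Fail loudly so a renamed or deleted snippet is caught in review.
            raise KeyError(f"unknown snippet: {key}")
        return snippets[key]

    return PLACEHOLDER.sub(substitute, body)


if __name__ == "__main__":
    page = "Before you export a report: {{snippet:data-warning}}"
    print(render(page, SNIPPETS))
```

Because pages store only the placeholder, editing a snippet once changes every page that includes it on the next render.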

3) Review and Validation

Purpose: Ensure accuracy, compliance, and editorial quality.

What to do:

- Dual-track review: SME for correctness, editor for clarity and style.

- Permissions and privacy: confirm access levels, scrub PII, apply labels (internal, partner, public).

- Versioning: semantic versions for major/minor changes; changelog notes summarizing what changed and why.

- SLA targets: e.g., high-impact updates reviewed within 48 hours; routine within 5 business days.

Output: Approved content with an audit trail.
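
One lightweight way to keep versions and changelog notes together is sketched below. It assumes a two-part `major.minor` version string, which is a simplification of full semantic versioning, and the `ChangelogEntry` fields are hypothetical names for the “what changed and why” note.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ChangelogEntry:
    version: str
    changed_on: date
    summary: str  # what changed
    reason: str   # why it changed


def bump(version: str, change: str) -> str:
    """Return the next 'major.minor' version for a 'major' or 'minor' change."""
    major, minor = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0"
    if change == "minor":
        return f"{major}.{minor + 1}"
    raise ValueError(f"unknown change type: {change}")


def record_change(history: list, change: str, summary: str,
                  reason: str, today: date) -> ChangelogEntry:
    """Append a changelog entry with the correctly bumped version."""
    current = history[-1].version if history else "0.0"
    entry = ChangelogEntry(bump(current, change), today, summary, reason)
    history.append(entry)
    return entry


if __name__ == "__main__":
    log = []
    record_change(log, "major", "First approved version", "New process", date(2024, 1, 2))
    record_change(log, "minor", "Fixed a menu label", "UI rename", date(2024, 2, 5))
    for entry in log:
        print(entry.version, entry.changed_on, entry.summary)
```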

4) Publish and Distribute

Purpose: Get knowledge to the right people and systems.

What to do:

- Multichannel delivery: intranet, help center, agent-assist sidebars, in-app guides, chatbots.

- Smart metadata: tags for product, audience, role, version, region, compliance.

- Search optimization: task-first titles, strong H1/H2s, synonyms, featured snippets for common tasks, canonical links to avoid duplicates.

- Notifications: change digests to relevant teams; “what changed” summaries for subscribers.

Output: Published content that is discoverable and contextual.

5) Feedback and Observability

Purpose: Turn usage into continuous improvement signals.

What to track:

- Search analytics: top queries, zero-result rate, reformulation rate, abandonment.

- Content analytics: views, dwell time, scroll depth, exit rate, thumbs up/down, “was this helpful” comments.

- Support signals: deflection rate, time-to-resolution impact, macro adoption.

- Freshness signals: pages nearing review deadlines, orphaned content, duplicate clusters.

Output: A prioritized improvement list driven by data, not opinion.
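
Two of the search signals above can be computed from a plain query log, as in the sketch below. The `SearchEvent` schema is an assumption, and “reformulation” is defined here as a search with no click that is followed by a different query in the same session; analytics tools may define it differently.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    session: str   # hypothetical session identifier
    query: str
    results: int   # number of results returned
    clicked: bool  # whether the user opened any result


def zero_result_rate(events: list) -> float:
    """Share of searches that returned nothing."""
    if not events:
        return 0.0
    return sum(e.results == 0 for e in events) / len(events)


def reformulation_rate(events: list) -> float:
    """Share of searches abandoned for a different query in the same session.

    Assumes events are sorted by session and then by time.
    """
    if not events:
        return 0.0
    reformulated = 0
    for prev, nxt in zip(events, events[1:]):
        same_session = prev.session == nxt.session
        if same_session and not prev.clicked and prev.query != nxt.query:
            reformulated += 1
    return reformulated / len(events)


if __name__ == "__main__":
    log = [
        SearchEvent("s1", "vpn", 0, False),
        SearchEvent("s1", "vpn setup", 4, True),
        SearchEvent("s2", "expenses", 3, True),
    ]
    print(zero_result_rate(log), reformulation_rate(log))
```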

6) Maintenance and Refresh

Purpose: Keep knowledge current and reduce debt.

What to do:

- Review cadence: assign review intervals by risk (e.g., 30–60–90–180 days). Show “last verified” and “next review” prominently.

- Owner accountability: owners receive automated reminders; missed SLAs escalate.

- Snippet updates cascade: update the source snippet once, and the change appears everywhere it’s used.

- Deprecation and archiving: sunset criteria, redirect old URLs, maintain a quick “what replaced this” note.

- Contradiction checks: detect overlapping or conflicting pages and consolidate.

Output: A living library where old content is either updated or gracefully retired.
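
The review cadence above can be reduced to a small lookup plus a date calculation. In this sketch the intervals come from the 30/60/90/180-day example, while the risk tier names and the 14-day warning window are assumptions.

```python
from datetime import date, timedelta

# Intervals follow the 30/60/90/180-day example; tier names are assumed.
REVIEW_INTERVAL_DAYS = {"critical": 30, "high": 60, "medium": 90, "low": 180}


def next_review(last_verified: date, risk: str) -> date:
    """Date by which the page must be verified again."""
    return last_verified + timedelta(days=REVIEW_INTERVAL_DAYS[risk])


def review_status(last_verified: date, risk: str, today: date,
                  warn_days: int = 14) -> str:
    """Classify a page as 'overdue', 'due soon', or 'current'."""
    due = next_review(last_verified, risk)
    if today > due:
        return "overdue"
    if (due - today).days <= warn_days:
        return "due soon"
    return "current"


if __name__ == "__main__":
    verified = date(2024, 1, 1)
    print("next review:", next_review(verified, "medium"))
    print("status:", review_status(verified, "medium", date(2024, 3, 25)))
```

A scheduled job could run `review_status` over every page, remind owners of “due soon” pages, and escalate “overdue” ones.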

Governance Without Gridlock

- RACI-style role assignment for each page: owner, reviewer, publisher, approver.

- Editorial board: meets monthly for 30–60 minutes to review metrics and unblock decisions.

- Guardrails: publishing permissions by space or audience; change thresholds that require extra review.

- Compliance hooks: tag content subject to legal, security, or regulatory review; define shorter SLAs for these.

Keep policy succinct—one page of rules beats a manual no one reads.

The Minimal Metrics That Matter

- Findability: zero-result query rate, search reformulation rate, CTR from search.

- Utility: time-to-answer, article-assisted resolution rate, thumbs up ratio.

- Freshness: percentage of pages within their review SLA, median age since last verification.

- Quality: duplicate rate, orphaned page count, contradiction incidents.

- Business impact: time-to-proficiency for new hires, cycle time reduction, case deflection.

Set benchmarks, then review monthly. Use metrics to celebrate wins and focus effort.
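
As an example of turning one metric family into numbers, the sketch below computes the two freshness metrics from a list of pages. The input shape, a `(last_verified, review_interval_days)` pair per page, is an assumption; a real report would read these fields from the knowledge base.

```python
from datetime import date
from statistics import median


def freshness(pages: list, today: date) -> dict:
    """Compute freshness metrics.

    pages is a list of (last_verified, review_interval_days) tuples.
    """
    if not pages:
        return {"pct_within_sla": 0.0, "median_age_days": 0}
    ages = [(today - last_verified).days for last_verified, _ in pages]
    within = sum(
        age <= interval for age, (_, interval) in zip(ages, pages)
    )
    return {
        "pct_within_sla": 100 * within / len(pages),
        "median_age_days": median(ages),
    }


if __name__ == "__main__":
    sample = [
        (date(2024, 6, 1), 30),
        (date(2024, 3, 1), 90),
        (date(2024, 5, 1), 180),
    ]
    print(freshness(sample, date(2024, 6, 30)))
```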

Operating Rhythm: Weekly to Quarterly

- Weekly: triage intake, review top search failures, ship quick fixes.

- Biweekly: publish sprint—bundle related updates for visibility and training.

- Monthly: health review—freshness scorecard, top gaps, deprecation list, owner performance.

- Quarterly: taxonomy tune-up, template improvements, cross-team content audit.

Consistency beats intensity. Small, predictable cycles build trust.

Taxonomy, Tags, and Knowledge Graphs

- Start simple: product, process, role, region, lifecycle stage.

- Synonyms and aliases: reflect how users actually search (acronyms, old names, competitor terms).

- Entities and relationships: connect people, processes, systems, policies; enable richer discovery.

- Don’t overdo it: too many tags reduce consistency. Aim for 5–8 high-signal fields.

A lean taxonomy paired with synonyms can produce outsized gains in search and reuse.
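
A synonym list can be applied at query time with very little code. The sketch below expands single-word terms only; the synonym table is invented for the example, and most search engines offer a built-in synonym feature that should be used instead where it exists.

```python
# Hypothetical synonym table built from real search logs.
SYNONYMS = {
    "pto": {"paid time off", "vacation"},
    "vpn": {"remote access"},
}


def expand_query(query: str, synonyms: dict) -> list:
    """Return the query terms followed by any known synonyms for each term."""
    expanded = []
    for term in query.lower().split():
        expanded.append(term)
        # Sort so the output order is stable between runs.
        expanded.extend(sorted(synonyms.get(term, ())))
    return expanded


if __name__ == "__main__":
    print(expand_query("PTO policy", SYNONYMS))
```

Seed the table from zero-result and reformulated queries so it reflects the words people actually type.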

AI in the Content Lifecycle—With Guardrails

- Draft acceleration: generate first drafts from tickets or transcripts; humans finalize.

- Summarization: extract FAQs, steps, and change summaries for “what’s new.”

- Quality checks: style, reading level, contradiction detection, PII scanning.

- Retrieval: hybrid search (BM25 keyword ranking plus vector similarity) to surface both exact-term matches and semantically related content.

- Evaluate: measure AI answer accuracy, citation coverage, and task success before expanding scope.

Ground everything in approved content, honor permissions, and keep humans in the loop.
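
Hybrid retrieval needs some way to merge the keyword ranking with the vector ranking. One common option, shown here as a sketch rather than a recommendation, is reciprocal rank fusion (RRF), which scores each document by summing `1 / (k + rank)` across the input rankings. The document IDs are placeholders, and `k = 60` is a frequently used default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list, k: int = 60) -> list:
    """Merge ranked lists of document IDs into one list, best first.

    Each document earns 1 / (k + rank) per list it appears in, with rank
    starting at 1. Ties are broken alphabetically by ID.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    bm25_hits = ["a", "b", "c"]    # placeholder keyword ranking
    vector_hits = ["b", "d", "a"]  # placeholder vector ranking
    print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
```

RRF works on ranks alone, so it avoids having to normalize BM25 scores against cosine similarities. Permission filtering still has to happen before results reach the user.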

Roles That Make It Work

- Content Owner: accountable for accuracy and freshness.

- SME Reviewer: validates correctness and risks.

- Editor: ensures clarity, structure, and consistency.

- Platform Admin: manages metadata, permissions, and integrations.

- KM Lead: runs the operating rhythm and reporting.

Small orgs can combine roles; keep accountability explicit.

Templates That Save Time

- Task article: When to use it, prerequisites, steps, variations, troubleshooting, last verified.

- Concept article: Definition, why it matters, key principles, related tasks.

- Reference: Parameters, limits, examples, common pitfalls.

- Release note: What changed, who’s affected, actions required, links to updated pages.

Templates reduce cognitive load and increase maintainability.

Common Pitfalls and How to Avoid Them

- Pile-ups without owners: Require an owner for every page; archive ownerless content after a grace period.

- Sprawl from copy-paste: Use transclusion for shared snippets; centralize definitions and warnings.

- Big-batch reviews: Switch to rolling reviews with short SLAs and small changesets.

- Vanity metrics: Replace “page views” with “task completion” and “deflection.”

- Botched deprecations: Redirect URLs, annotate replacements, and notify subscribers.
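
Redirects tend to accumulate into chains as pages are merged more than once. The sketch below follows a redirect map to its final destination and refuses to loop; the map format (old path to new path) and the hop limit are assumptions for illustration.

```python
def resolve(url: str, redirects: dict, max_hops: int = 10) -> str:
    """Follow redirects until reaching a URL that is not redirected.

    Raises ValueError on a redirect loop or an overly long chain.
    """
    seen = set()
    while url in redirects:
        if url in seen or len(seen) >= max_hops:
            raise ValueError(f"redirect loop or chain too long at {url}")
        seen.add(url)
        url = redirects[url]
    return url


if __name__ == "__main__":
    # Hypothetical redirect map: deprecated path -> replacement path.
    redirect_map = {"/kb/old-vpn": "/kb/vpn-2023", "/kb/vpn-2023": "/kb/vpn"}
    print(resolve("/kb/old-vpn", redirect_map))
```

Running a check like this over every deprecated URL during the monthly health review catches loops and chains before users hit them.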

Implementation Blueprint (Lean and Effective)

- Week 1–2: Define 2–3 KPIs, assign content ownership, set review SLAs, adopt templates.

- Week 3–4: Instrument search and content analytics; establish intake; build a prioritized backlog.

- Week 5–6: Fix high-impact search failures; add synonyms; refactor top 20 pages for structure.

- Week 7–8: Launch review reminders; set up snippet library; turn on freshness labels.

- Week 9–10: Deprecate or merge duplicates; enforce redirects; publish “what changed” digest.

- Week 11–12: Run the first monthly health review; adjust taxonomy; lock in the cadence.

Keep the scope tight. Momentum is the goal.

Tooling Guidance (Tech-Agnostic)

- Authoring: Wiki or headless CMS with templates and approval workflows.

- Storage and reuse: Component content/snippets and versioning.

- Discovery: Hybrid search with synonyms, boosting, and facets.

- Analytics: Search logs, content performance, freshness dashboard.

- Automation: Review reminders, publishing digests, redirects on deprecation.

- Integrations: CRM/ITSM for source signals; agent assist for delivery; SSO for permissions.

Choose tools that fit processes—not the other way around.

What “Fresh” Looks Like in Practice

- Every article shows “last verified” and the accountable owner.

- The 50 most-used pages are reviewed at least quarterly.

- Zero-result queries are below a set threshold and trending down.

- Redundant content is redirected to a single canonical source.

- Release notes consistently link to updated knowledge pages.

- Editors publish a monthly “what changed” with 5–10 notable updates.

That’s a living knowledge base—trusted, current, and measurably useful.